Seven Winning Strategies to Use for DeepSeek

DeepSeek-V3 proves to be highly efficient in this regard. The first DeepSeek models were essentially the same as Llama: dense decoder-only Transformers. A decoder-only Transformer consists of multiple identical decoder layers. Fire-Flyer 2 consists of a co-designed software and hardware architecture. The architecture was essentially the same as that of the Llama series. On 2 November 2023, DeepSeek released its first model, DeepSeek Coder, and on 29 November 2023 it released the DeepSeek-LLM series of models. DeepSeek Coder V2 showcased a generic function for calculating factorials, with error handling, using traits and higher-order functions. It's a very capable model, but not one that sparks as much joy to use as Claude or super-polished apps like ChatGPT, so I don't expect to keep using it long term. Why this matters: intelligence is the best defense. Research like this both highlights the fragility of LLM technology and illustrates how, as you scale up LLMs, they seem to become cognitively capable enough to have their own defenses against weird attacks like this.
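
The factorial function attributed to DeepSeek Coder V2 is not reproduced in this post, so what follows is only a minimal sketch, assuming Rust, of what "a generic function with error handling using traits and higher-order functions" can look like. The trait `MulChecked`, the error type `FactorialError`, and the functions `factorial` and `factorial_of_str` are hypothetical names chosen for illustration. The sketch also covers the failure mode noted further down: an input string that cannot be parsed into an integer.

```rust
use std::fmt;
use std::num::ParseIntError;

/// Hypothetical trait: unsigned integer types that can report multiplication overflow.
/// `From<u32>` lets the generic code build 1, 2, ..., n in the target type.
trait MulChecked: Copy + From<u32> {
    fn mul_checked(self, rhs: Self) -> Option<Self>;
}

impl MulChecked for u64 {
    fn mul_checked(self, rhs: Self) -> Option<Self> {
        self.checked_mul(rhs)
    }
}

impl MulChecked for u128 {
    fn mul_checked(self, rhs: Self) -> Option<Self> {
        self.checked_mul(rhs)
    }
}

/// Hypothetical error type covering both failure modes.
#[derive(Debug, PartialEq)]
enum FactorialError {
    /// The input string was not a valid non-negative integer.
    Parse(ParseIntError),
    /// n! does not fit in the chosen integer type (holds the requested n).
    Overflow(u32),
}

impl fmt::Display for FactorialError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            FactorialError::Parse(e) => write!(f, "not a valid non-negative integer: {e}"),
            FactorialError::Overflow(n) => write!(f, "{n}! overflows the target integer type"),
        }
    }
}

/// Generic factorial: `try_fold` (a higher-order function) multiplies 1..=n
/// and stops at the first overflowing step instead of wrapping around.
fn factorial<T: MulChecked>(n: u32) -> Result<T, FactorialError> {
    (1..=n).try_fold(T::from(1u32), |acc, k| {
        acc.mul_checked(T::from(k)).ok_or(FactorialError::Overflow(n))
    })
}

/// Parse a string such as " 5 " and compute its factorial in type `T`.
/// Negative or non-numeric input is rejected as a parse error.
fn factorial_of_str<T: MulChecked>(input: &str) -> Result<T, FactorialError> {
    let n: u32 = input.trim().parse().map_err(FactorialError::Parse)?;
    factorial(n)
}

fn main() {
    for input in ["5", "20", "21", "abc"] {
        match factorial_of_str::<u64>(input) {
            Ok(value) => println!("{input}! = {value}"),
            Err(err) => println!("{input}: {err}"),
        }
    }
    // A wider type pushes the overflow point further out.
    println!("21! as u128 = {:?}", factorial::<u128>(21));
}
```

Using `try_fold` lets the overflow check short-circuit on the first failing multiplication instead of silently wrapping. With `u64`, 20! is the largest factorial that fits, so `"21"` reports an overflow, while the same call with `u128` still succeeds.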

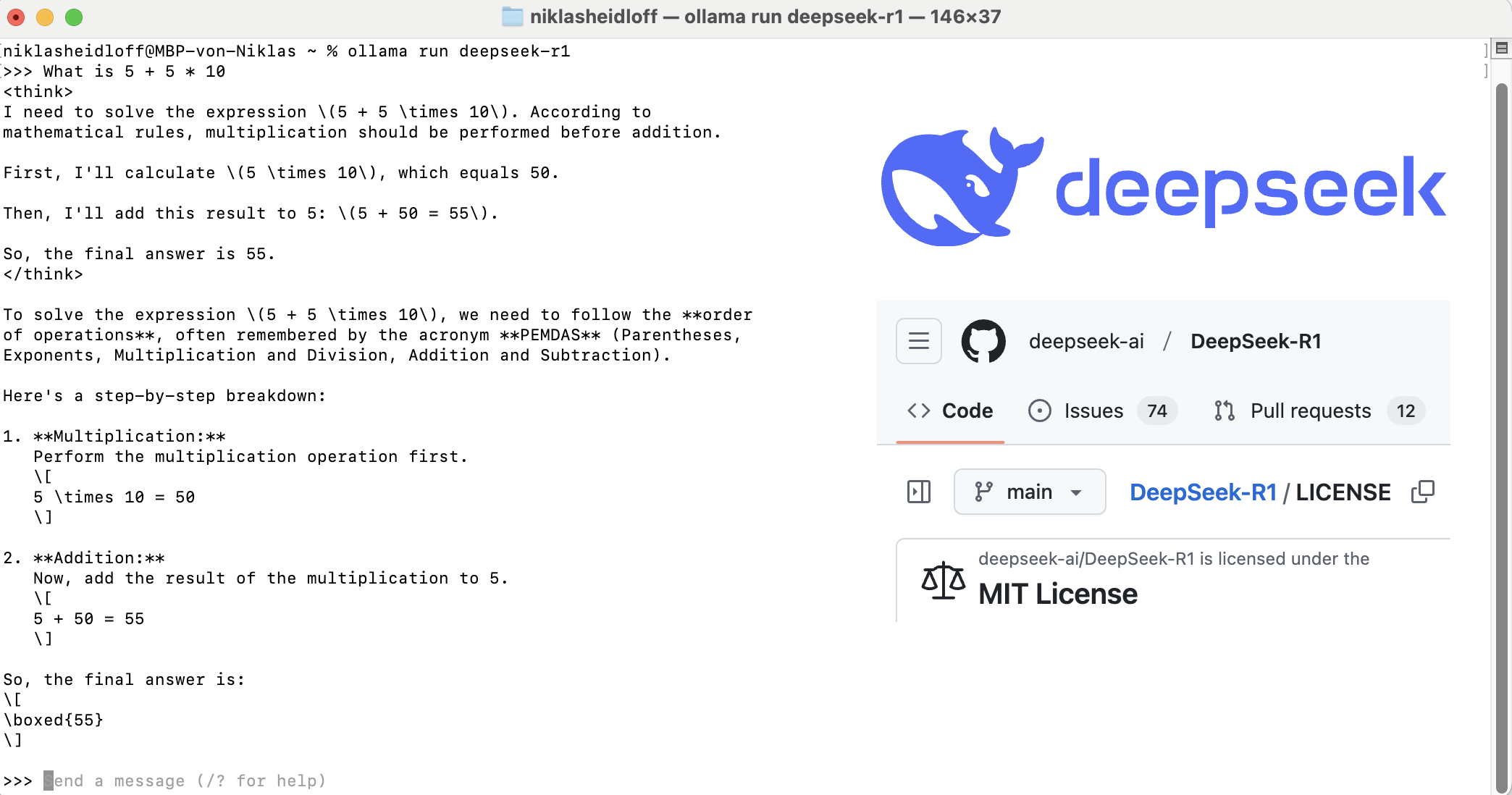

This year we have seen significant improvements in frontier capabilities, as well as a brand-new scaling paradigm. However, it is important to note that Janus is a multimodal LLM capable of generating text conversations, analyzing images, and generating images as well. Software development: R1 could assist developers by generating code snippets, debugging existing code, and explaining complex coding concepts. DeepSeek's hiring prioritizes technical ability over work experience; most new hires are either recent university graduates or developers whose AI careers are less established. Once I'd worked that out, I had to do some prompt engineering to stop them from putting their own "signatures" in front of their responses. This resulted in the released version of Chat. In April 2024, they released three DeepSeek-Math models: Base, Instruct, and RL. The free plan includes basic features, while the premium plan offers advanced tools and capabilities. In standard MoE, some experts can become overused while others are rarely used, wasting capacity. Meanwhile, the FFN layer adopts a variant of the mixture-of-experts (MoE) approach, effectively doubling the number of experts compared with standard implementations. In contrast to standard buffered I/O, Direct I/O does not cache data.
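
The load-imbalance problem described above can be illustrated with a toy top-k router. This is a minimal sketch under stated assumptions, not DeepSeek's implementation: gate scores are given as plain numbers rather than produced by a learned network, each token goes to its `k` highest-scoring experts, and the hypothetical helpers `top_k`, `route`, and `imbalance` simply count how many tokens each expert receives. Real MoE layers also weight the expert outputs and usually add some balancing mechanism, both of which this sketch omits.

```rust
/// Indices of the `k` highest gate scores, best first (ties go to the lower index).
fn top_k(scores: &[f32], k: usize) -> Vec<usize> {
    let mut idx: Vec<usize> = (0..scores.len()).collect();
    // Sort expert indices by descending score; `total_cmp` gives a total order on floats.
    idx.sort_by(|&a, &b| scores[b].total_cmp(&scores[a]).then(a.cmp(&b)));
    idx.truncate(k);
    idx
}

/// Route every token to its top-k experts and count the tokens each expert receives.
/// Assumes each score row has exactly `num_experts` entries.
fn route(gate_scores: &[Vec<f32>], num_experts: usize, k: usize) -> (Vec<Vec<usize>>, Vec<usize>) {
    let mut load = vec![0usize; num_experts];
    let mut assignments = Vec::with_capacity(gate_scores.len());
    for scores in gate_scores {
        let chosen = top_k(scores, k);
        for &e in &chosen {
            load[e] += 1;
        }
        assignments.push(chosen);
    }
    (assignments, load)
}

/// Max-to-mean load ratio: 1.0 means perfectly balanced; higher means some experts are overused.
/// Returns 0.0 when there are no experts or no routed tokens.
fn imbalance(load: &[usize]) -> f64 {
    let total: usize = load.iter().sum();
    if load.is_empty() || total == 0 {
        return 0.0;
    }
    let mean = total as f64 / load.len() as f64;
    let max = *load.iter().max().unwrap() as f64;
    max / mean
}

fn main() {
    // Hypothetical gate scores: 4 tokens x 4 experts.
    let gate_scores: Vec<Vec<f32>> = vec![
        vec![0.9, 0.8, 0.1, 0.0],
        vec![0.7, 0.9, 0.2, 0.1],
        vec![0.6, 0.5, 0.4, 0.3],
        vec![0.1, 0.2, 0.9, 0.8],
    ];
    let (assignments, load) = route(&gate_scores, 4, 2);
    for (token, experts) in assignments.iter().enumerate() {
        println!("token {token} -> experts {experts:?}");
    }
    println!("tokens per expert: {load:?}");
    println!("max/mean load: {:.2}", imbalance(&load));
}
```

With these sample scores, experts 0 and 1 receive three tokens each while experts 2 and 3 receive one, a max-to-mean ratio of 1.5; a perfectly balanced router would score 1.0.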

On Thursday, US lawmakers began pushing to immediately ban DeepSeek from all government devices, citing national security concerns that the Chinese Communist Party may have built a backdoor into the service to access Americans' sensitive personal data. DeepSeek-V2 was released in May 2024. It offered strong performance at a low price and became the catalyst for China's AI model price war. Error handling: the factorial calculation may fail if the input string can't be parsed into an integer. On 9 January 2024, DeepSeek released two DeepSeek-MoE models (Base and Chat). On 27 January 2025, DeepSeek released a unified multimodal understanding and generation model called Janus-Pro. On 20 November 2024, DeepSeek-R1-Lite-Preview became available via API and chat. I don't know where Wang got his information; I'm guessing he's referring to this November 2024 tweet from Dylan Patel, which says that DeepSeek had "over 50k Hopper GPUs". An underrated point: the knowledge cutoff is April 2024, which helps with more recent events, music and film recommendations, cutting-edge code documentation, and research-paper knowledge. We also evaluated popular code models at different quantization levels to determine which are best at Solidity (as of August 2024), and compared them to ChatGPT and Claude.

User feedback can offer valuable insights into the settings and configurations that give the best results. DeepSeek's remarkable results shouldn't be overhyped. Even President Donald Trump, who has made it his mission to come out ahead of China in AI, called DeepSeek's success a "positive development," describing it as a "wake-up call" for American industries to sharpen their competitive edge. Wait, you haven't even talked about R1 yet. In December 2024, they released a base model, DeepSeek-V3-Base, and a chat model, DeepSeek-V3. Reinforcement learning (RL): the reward model was a process reward model (PRM) trained from Base according to the Math-Shepherd method. Researchers at the Chinese AI company DeepSeek have demonstrated an exotic method for generating synthetic data (data produced by AI models that can then be used to train AI models). Supervised finetuning (SFT): 2B tokens of instruction data. Pretraining: a dataset of 8.1T tokens, with 12% more Chinese tokens than English ones. Pretraining: 1.8T tokens (87% source code, 10% code-related English from GitHub markdown and Stack Exchange, and 3% code-unrelated Chinese). Long-context pretraining: 200B tokens.
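
The token mix quoted for the 1.8T-token pretraining stage can be turned into absolute counts with simple arithmetic. The sketch below, assuming Rust and using a hypothetical `split_tokens` helper, checks that the shares add up to 100% and multiplies them out; it only restates the percentages given in the text and makes no claim about how the data was actually assembled.

```rust
/// Pretraining mix as stated in the text: (data source, share in percent).
const MIX: [(&str, u64); 3] = [
    ("source code", 87),
    ("code-related English", 10),
    ("code-unrelated Chinese", 3),
];

/// Hypothetical helper: split a token budget by integer percentages.
/// Returns `None` if the shares do not add up to exactly 100.
fn split_tokens<'a>(total: u64, mix: &[(&'a str, u64)]) -> Option<Vec<(&'a str, u64)>> {
    let share_sum: u64 = mix.iter().map(|&(_, pct)| pct).sum();
    if share_sum != 100 {
        return None;
    }
    // Multiply before dividing so the integer result is exact whenever total * pct is a multiple of 100.
    Some(mix.iter().map(|&(name, pct)| (name, total * pct / 100)).collect())
}

fn main() {
    // 1.8T pretraining tokens, as quoted in the text.
    const TOTAL_TOKENS: u64 = 1_800_000_000_000;
    match split_tokens(TOTAL_TOKENS, &MIX) {
        Some(parts) => {
            for (name, tokens) in parts {
                println!("{name}: {}B tokens", tokens / 1_000_000_000);
            }
        }
        None => println!("shares do not sum to 100%"),
    }
}
```

Run as written, this prints 1566B tokens of source code, 180B of code-related English, and 54B of code-unrelated Chinese.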
